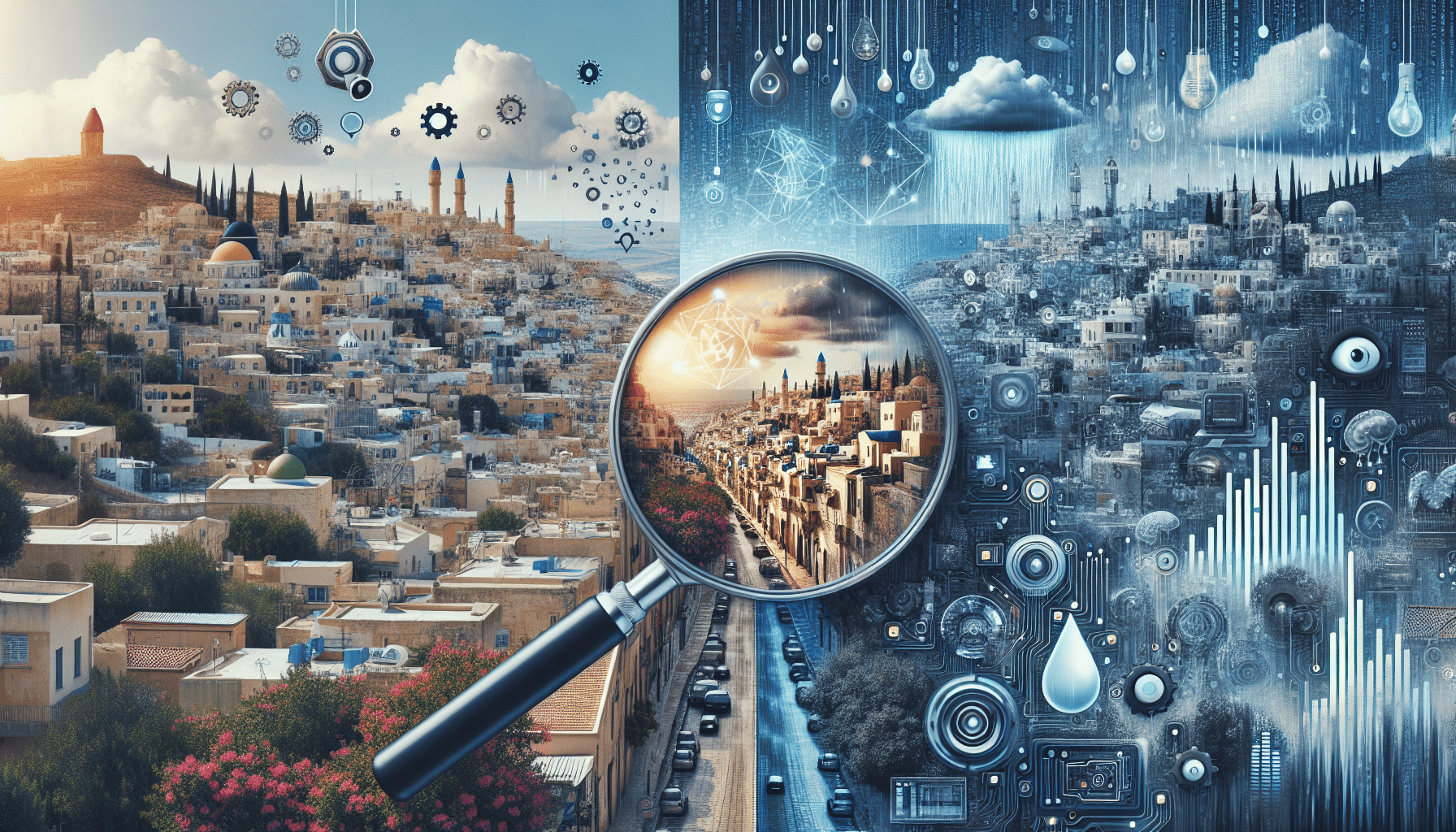

Imagine staring at a photo of a flooded street strewn with overturned cars, like the dramatic scenes from Valencia. Is it real, or the work of an AI image generator? Tools like Midjourney and Dall-E now produce lifelike images with ease, fueling debates about what is real and what is synthetic. For solopreneurs like you, verifying image authenticity matters, especially as social media platforms amplify AI content for engagement. Join me as we explore ways to tell real images from AI fakes, using practical tools and examples from the Valencia “rain bomb” flood. Along the way, we’ll look at the role AI plays in media today and how to meet these challenges head-on.

## Understanding AI Image Generators

AI image generators, such as Midjourney and Dall-E, are advanced tools designed to create digital images from scratch using artificial intelligence algorithms. These generators function by interpreting text-based prompts and transforming them into visual media, allowing for the creation of vivid artwork or realistic images without any direct human drawing.

These technologies are increasingly being used across a spectrum of industries. For instance, content creators and digital marketers employ them to craft compelling visual content that enhances engagement. This utility extends to designing graphics for gaming, advertisements, and film, where quick, automated generation of assets is invaluable.

An important aspect of AI image generators is the challenge they pose in distinguishing between AI-created images and genuine photographs. As these tools are capable of producing highly realistic visuals, they blur the lines between what is real and what is artificially constructed. This technological leap forward raises significant questions about authenticity and trust, affecting how society perceives digital content.

The societal implications are profound: AI-generated images that go unidentified can spread misinformation, breed skepticism, and erode trust in digital media. This underscores the need for tools and practices that verify the authenticity of media and keep information accurate and reliable in an increasingly AI-driven world.

## Valencia Flood: Real vs. AI Skepticism

The "Rain Bomb" Event Recap

In late October 2024, Valencia was hit by a severe storm labeled a "rain bomb," which in some areas dropped the equivalent of a year's worth of rain in a single day. The extreme weather caused widespread damage, particularly in urban areas where infrastructure could not cope with the sudden influx of water. Among the most striking scenes were streets littered with overturned cars and debris, evoking post-apocalyptic imagery. These images spread quickly across social media, prompting widespread discussion and sharing.

Social Media Reaction

The visually dramatic Valencia flood photographs soon captured the attention of social media users. But with AI image generators like Midjourney and Dall-E now commonplace, the sharpness and surreal quality of some images drew skepticism. Users speculated that the photos were sophisticated AI fabrications, and comments flooded in questioning their authenticity: what looked like a stark depiction of nature's fury might, they suspected, be a digital concoction. This reaction highlights a significant issue in today's digital landscape: as the line between real events and AI-generated simulations blurs, audiences find it harder to recognize genuine content.

## Discerning Authenticity: Techniques and Tools

With AI image generators growing more sophisticated, distinguishing real images from AI-generated ones has become a critical skill. In the case of the Valencia flood photograph, skeptics questioned its authenticity because of its sharp, surreal quality, which is exactly why verifying such images matters for maintaining credibility and public trust.

Verification Methods

One way to confirm an image's authenticity is to check it against maps and street-level imagery. By identifying landmarks or recognizable features in an image, you can confirm that they exist in the real world. In the Valencia flood case, for instance, matching the scene against Google Maps, Street View, or satellite images can settle doubts about whether the photograph shows a real place. Cross-referencing the image with weather reports or news articles adds further context, tying the visual evidence to documented events.
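
When a file still carries its original EXIF GPS tags, you can add a quick numeric check to the map comparison. Many social platforms strip these tags on upload, so this only helps with files obtained closer to the source. The Python sketch below is a minimal illustration, assuming the GPS values have already been extracted into (degrees, minutes, seconds) tuples; `dms_to_decimal`, `haversine_km`, and `location_consistent` are hypothetical helper names, and the 2 km tolerance is an arbitrary choice.

```python
import math

# Hypothetical helpers for illustration. GPS values are assumed to be already
# extracted from the file's EXIF data as (degrees, minutes, seconds) tuples.


def dms_to_decimal(dms, ref):
    """Convert (degrees, minutes, seconds) plus an N/S/E/W reference to signed decimal degrees."""
    degrees, minutes, seconds = dms
    value = degrees + minutes / 60 + seconds / 3600
    return -value if ref in ("S", "W") else value


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres on a spherical Earth (mean radius 6371 km)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def location_consistent(gps, claimed_lat, claimed_lon, tolerance_km=2.0):
    """True if the photo's embedded GPS position lies within tolerance_km of the claimed place."""
    lat = dms_to_decimal(gps["lat"], gps["lat_ref"])
    lon = dms_to_decimal(gps["lon"], gps["lon_ref"])
    return haversine_km(lat, lon, claimed_lat, claimed_lon) <= tolerance_km


if __name__ == "__main__":
    # Hypothetical EXIF values from a photo claimed to show central Valencia.
    photo_gps = {"lat": (39, 28, 12.0), "lat_ref": "N", "lon": (0, 22, 30.0), "lon_ref": "W"}
    # Approximate coordinates of Valencia's city centre.
    print(location_consistent(photo_gps, 39.4699, -0.3763))  # prints True
```

A match only shows that the embedded coordinates agree with the claim. GPS tags can be edited, so treat this as one signal alongside landmarks, weather reports, and news coverage.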

Spotting AI-Generated Images

AI-generated images often contain subtle anomalies that betray their artificial origins: unnatural textures, inconsistent lighting, or elements that defy physics, such as warped objects or impossible reflections. Dall-E and Midjourney produce high-quality visuals, but they still occasionally show such telltale signs. AI image generators may also produce repetitive patterns or peculiar distortions that signal their synthetic nature. Learning to recognize these signs helps viewers evaluate images critically and tell reality from sophisticated mimicry.

## Rise of AI-Generated Content

As AI tools spread across the digital landscape, the term "AI slop" has emerged to describe the overwhelming influx of low-quality, mass-produced AI-generated content. This rampant production, enabled by image generators like Midjourney and Dall-E, muddies the waters for genuine content and makes it harder to tell real visuals from fabricated ones. The constant overflow can erode trust in digital media as audiences struggle to verify authenticity amid a sea of AI outputs.

Social Media's Role

Social media platforms play a pivotal role in spreading AI-generated content. Because they prioritize engagement, their algorithms tend to promote content with high viral potential regardless of its authenticity. This inadvertently elevates AI-generated images, which are often more visually striking or controversial and therefore draw more views and interactions. While this boosts platforms' engagement metrics, it also deepens users' skepticism and makes it harder for the public to distinguish real images from AI-manipulated ones. Viral AI creations can even eclipse coverage of real events, blending entertainment with misinformation.

## Why AI Content Is Popular and Profitable

In recent years, AI-generated content has steadily gained popularity among digital creators because it is fast and cheap to produce. Image generators like Midjourney and Dall-E make content creation accessible, saving much of the time and effort that traditional media production requires. Artists and marketers can produce high-quality visuals rapidly, which encourages experimentation and shortens project turnaround. Campaigns built on AI visuals can capture public attention quickly, much as viral deepfake videos have. This ease and speed help creators stay relevant and increase their engagement on social media.

Economically, both platforms and creators benefit from AI-generated content. Platforms like Meta use algorithms that promote engaging content, including AI-crafted posts, that keeps users on their apps longer. More time on the app means more views of advertisements and sponsored content, and therefore more revenue. Posts with eye-catching AI visuals can draw high engagement, which in turn can bring creators better ad placements and more income. This feedback loop not only sustains production but also fuels the wider digital economy, cementing AI-generated content as a lucrative endeavor and ensuring the continued growth of AI tools and their integration into digital strategies worldwide.

## Ethical Concerns of AI in Media

Information Integrity

The rapid proliferation of AI-generated media introduces a significant risk to information integrity, primarily through the dissemination of misinformation. AI image generators can create hyper-realistic images that blur the lines between reality and fabrication, leading to false narratives gaining traction. Deepfakes, a particularly worrisome application, enable the creation of realistic yet entirely fabricated videos, potentially distorting political events, public figures' actions, or historical events. These deepfakes threaten to undermine public confidence in media, as the authenticity of visual content becomes harder to ascertain without expert intervention.

Impact on Trust

The infiltration of AI into media has profound implications for public trust. As people encounter more AI-generated content, their skepticism about visual media intensifies, and this distrust can erode faith in news agencies and social platforms as sources of credible information. Rebuilding that trust requires deliberate strategies: media literacy programs that teach the public to identify AI-generated content, better detection algorithms, and enforceable ethical guidelines for how AI is used in creating and distributing media.

## Preparing for an AI Future

As AI tools continue to gain prominence in crafting digital content, preparing for a future where AI plays a central role means enhancing digital literacy and establishing clear regulations.

Enhancing Digital Literacy

Understanding AI-generated content and learning to tell real images from digitally created ones is increasingly essential. Because powerful generators like Midjourney and Dall-E make fabrications hard to spot, people need to approach images critically. A few simple verification steps help: checking image metadata, running a reverse image search, and cross-referencing with reliable news sources can all help confirm authenticity. Developing an eye for odd details, such as inconsistent textures or unnatural lighting, can also help reveal AI origins.
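
As a concrete example of the metadata check, the Python sketch below walks the chunks of a PNG file using only the standard library and collects its text chunks (`tEXt`, `zTXt`, `iTXt`), where some generators and editors record prompts or software names. It also flags a `caBX` chunk, which is where the C2PA specification stores a Content Credentials manifest in PNG files. The function names are hypothetical, and the check is deliberately limited: metadata is easy to strip or forge, so a missing marker proves nothing, and a present one still deserves verification with a dedicated C2PA tool.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_chunk(ctype: bytes, payload: bytes) -> bytes:
    """Build one PNG chunk (length, type, data, CRC); handy for creating test files."""
    crc = zlib.crc32(ctype + payload) & 0xFFFFFFFF
    return struct.pack(">I", len(payload)) + ctype + payload + struct.pack(">I", crc)


def read_png_chunks(data: bytes):
    """Yield (chunk_type, chunk_data) pairs from raw PNG bytes, stopping at IEND."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        yield ctype.decode("latin-1"), data[pos + 8:pos + 8 + length]
        pos += 12 + length  # 4-byte length + 4-byte type + data + 4-byte CRC
        if ctype == b"IEND":
            break


def inspect_png_metadata(data: bytes) -> dict:
    """Collect text metadata and note whether a C2PA (caBX) chunk is present."""
    text, has_c2pa = {}, False
    for ctype, chunk in read_png_chunks(data):
        if ctype == "tEXt":  # keyword \0 Latin-1 text
            key, _, value = chunk.partition(b"\x00")
            text[key.decode("latin-1")] = value.decode("latin-1")
        elif ctype == "zTXt":  # keyword \0 method byte, then zlib-compressed text
            key, _, rest = chunk.partition(b"\x00")
            text[key.decode("latin-1")] = zlib.decompress(rest[1:]).decode("latin-1")
        elif ctype == "iTXt":  # keyword \0 flag, method, language \0 translated keyword \0 UTF-8 text
            key, _, rest = chunk.partition(b"\x00")
            compressed, rest = rest[0], rest[2:]
            _language, _, rest = rest.partition(b"\x00")
            _translated, _, value = rest.partition(b"\x00")
            if compressed:
                value = zlib.decompress(value)
            text[key.decode("latin-1")] = value.decode("utf-8")
        elif ctype == "caBX":  # C2PA manifest store
            has_c2pa = True
    return {"text": text, "has_c2pa_manifest": has_c2pa}


if __name__ == "__main__":
    import sys
    with open(sys.argv[1], "rb") as f:
        print(inspect_png_metadata(f.read()))
```

Run it as `python inspect_png.py suspect.png` (the script name is arbitrary). JPEG files store metadata differently, so they would need a separate parser.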

Regulations and Policies

The widespread use of AI-generated content calls for robust regulation to curb misinformation and ensure ethical practices. Clear rules on distributing AI content can help limit the spread of false information and build public trust in media. The European Union offers one example: its AI Act includes transparency obligations that require deepfakes to be disclosed as artificially generated or manipulated. Such frameworks not only safeguard authenticity but also protect consumers from deceptive practices, fostering a responsible and informed digital ecosystem as our future becomes more deeply intertwined with AI.

## Identifying AI-Generated Videos

Tools for producing and editing video with AI are growing more sophisticated. Adobe Premiere Pro, enhanced with Adobe Sensei AI, offers features such as scene edit detection and automatic reframing to different aspect ratios, which simplify editing and optimize content for various platforms. Runway ML gives creators capabilities such as background removal and effects generated from text prompts, showing how deeply AI is now woven into the creative process.

Understanding these tools helps in recognizing AI-generated content. AI-made videos can show telltale signs such as implausibly perfect lighting or movement that looks unnaturally fluid, and spotting them takes a keen eye and some knowledge of video production. When verifying a video, analyze its metadata and use specialized software to examine frames for inconsistencies and for compression artifacts that may look different in AI-generated output.
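
For the metadata-analysis step, one common approach is to dump a clip's container and stream metadata with FFmpeg's `ffprobe` tool and read through the fields. The Python sketch below assumes `ffprobe` is installed and on the PATH; `probe` and `summarize` are hypothetical helper names, and the fields it surfaces (`creation_time`, `encoder`, codec, frame rate) are only leads for further checking, not evidence of AI generation on their own.

```python
import json
import subprocess


def probe(path: str) -> dict:
    """Run ffprobe (assumed installed) and return its JSON report for the file."""
    result = subprocess.run(
        ["ffprobe", "-v", "quiet", "-print_format", "json",
         "-show_format", "-show_streams", path],
        capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


def summarize(info: dict) -> list:
    """Turn an ffprobe report into human-readable notes worth a second look."""
    tags = info.get("format", {}).get("tags", {})
    notes = []
    if "creation_time" in tags:
        notes.append(f"creation_time: {tags['creation_time']}")
    else:
        notes.append("no creation_time tag (common after re-encoding or re-upload)")
    if "encoder" in tags:
        notes.append(f"container written by: {tags['encoder']}")
    for stream in info.get("streams", []):
        if stream.get("codec_type") == "video":
            notes.append(
                f"video stream: codec={stream.get('codec_name')}, "
                f"avg_frame_rate={stream.get('avg_frame_rate')}"
            )
    return notes


if __name__ == "__main__":
    import sys
    for note in summarize(probe(sys.argv[1])):
        print(note)
```

An encoder tag beginning with `Lavf` indicates the container was written by FFmpeg's libavformat, which suggests the file was processed after capture. That is typical of re-uploaded footage, so it is a prompt to look for the original source rather than a verdict.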

Tools such as InVID and Deepware Scanner help here. InVID can extract keyframes for reverse image searches and inspect a video's metadata, while Deepware Scanner analyzes videos for signs of deepfake manipulation. Both can flag suspicious content and help distinguish genuine footage from AI-generated creations. As AI continues to evolve, keeping up with these tools and techniques is vital to the integrity of the content we consume and share.

## FAQs on AI Image Generators

What is an AI image generator?

An AI image generator is a software application that uses artificial intelligence algorithms to create images from text descriptions or other input data. Midjourney and Dall-E are popular examples, known for producing detailed images that either mimic real-life scenes or invent entirely imaginative ones. These tools are widely used in digital media creation, advertising, and entertainment to enhance visual content and storytelling.

How can you spot AI-generated images?

Identifying AI-generated images can be challenging, but there are telltale signs. Look for anomalies in texture or lighting, such as overly smooth surfaces or inconsistent shadows. AI can also produce awkward features, like warped text or unnatural patterns that don't match typical camera or photo-editing errors. Examining an image's metadata can offer further clues, since some AI tools leave distinct markers in the file.

Why is there skepticism around digital images?

Skepticism around digital images stems from the growing sophistication of AI image generators, which create strikingly realistic yet artificial visuals. This blurs the line between reality and fabrication and leads audiences to question the authenticity of what they see, as the reaction to the Valencia flood photographs showed. The deluge of AI-created content online has made digital media harder to trust.

What ethical challenges does AI content raise?

Creating and spreading AI-generated content raises several ethical challenges, including misinformation and manipulation. Deepfakes can forge realistic images and videos, complicating questions of consent and authenticity. The results can be harmful, eroding public trust in media and making people more susceptible to false narratives. Navigating these challenges requires both technological literacy and robust legal frameworks.

How can you protect yourself against AI misinformation?

To guard against AI misinformation, cultivate digital literacy and healthy skepticism. Verify the source of an image and cross-reference it with credible news and weather reports. Use online tools that specialize in detecting AI content or unusual digital footprints. Learning common AI patterns and anomalies also helps you evaluate content critically and preserve the integrity of the information you share.

## Conclusion

In an age when AI advancements blur the line between the authentic and the artificial, distinguishing real images from AI-generated content matters more than ever. The "rain bomb" event in Valencia shows how the mere possibility of AI fakery can cast doubt on genuine events. As AI image generators like Midjourney and Dall-E grow more sophisticated, discerning reality in our daily digital consumption only gets harder.

To navigate this evolving landscape, it is imperative to stay updated and informed. Digital literacy is not just a buzzword; it is a necessity in ensuring that we can trust what we see and engage with online. By honing our skills in recognizing AI traits—such as unusual patterns or overly perfect imagery—we empower ourselves to question and verify the media we consume. This vigilance extends beyond individual efforts to a societal level, encouraging policies and practices that promote transparency and truthfulness in the digital realm.

As we continue to embrace technology's benefits, balancing enthusiasm with scrutiny will be key. By understanding AI's potential and limitations, we safeguard not only the integrity of information but also confidence in our ability to discern fact from fiction in an increasingly complex media landscape.

AI image generators like Midjourney and Dall-E are redefining how we view digital content. They create stunning visuals, but their rise also breeds skepticism, as the reaction to Valencia’s “rain bomb” images showed. Social media spreads such images widely, blurring the line between reality and AI fabrication. To discern the truth, verify images against maps and reports, and look for odd textures that suggest AI origins. The ease and profitability of AI content attract creators, but that content also raises ethical concerns and affects trust in media.

Staying informed and discerning is crucial in this AI-driven era. Implement simple checks using available software to ensure image authenticity. Engage with this fascinating mix of technology and trust, and remain adaptable in navigating a future rich in AI innovation.

Embrace the tools and tips shared here to harness the potential of AI image generators responsibly. Stay curious and proactive in understanding digital literacy and its ethical implications, ready to thrive in an evolving media landscape.